Getting Started

This start-up guide supports the VerifiedVisitors CoPilot in our portal and gives you all the information you need to start managing bots on your live API and Web endpoints. By following the six phases below, you'll be able to manage your website visitors.

Prerequisites

Please refer to the documentation for setting up your integration and return to the portal once this is successfully completed.

Once you've registered for an account and configured your integration through the 'add site' process, please check that the VerifiedVisitors platform is processing web requests.

You can check this using the Ingest Status tab on the top-right corner of the Command & Control page after selecting the domain you just added. A green tick indicates a successful integration.

Note that weblogs may take a short time to appear in the portal. This is expected. However, if none appear within 5 minutes of first adding the domain, please check your integration and confirm that your site is receiving traffic.

Phase 1: Learning

The first phase of using VerifiedVisitors requires no input from you. It's an observation and learning phase, where VerifiedVisitors begins to classify each of your web visitors into categories, so we can better manage your traffic.

The VerifiedVisitors AI platform learns as it observes your web traffic and builds up a detailed picture of your site's traffic. If your site is not under attack, it's best to allow a few days for the AI platform to analyse the incoming traffic. Depending on the volume of traffic, a day or so may be enough to gather sufficient data.

If you're under attack right now, skip ahead to the last section of this document, "What to do if you're under attack", to quickly enable some blocking rules for potentially high-risk automated visitors.

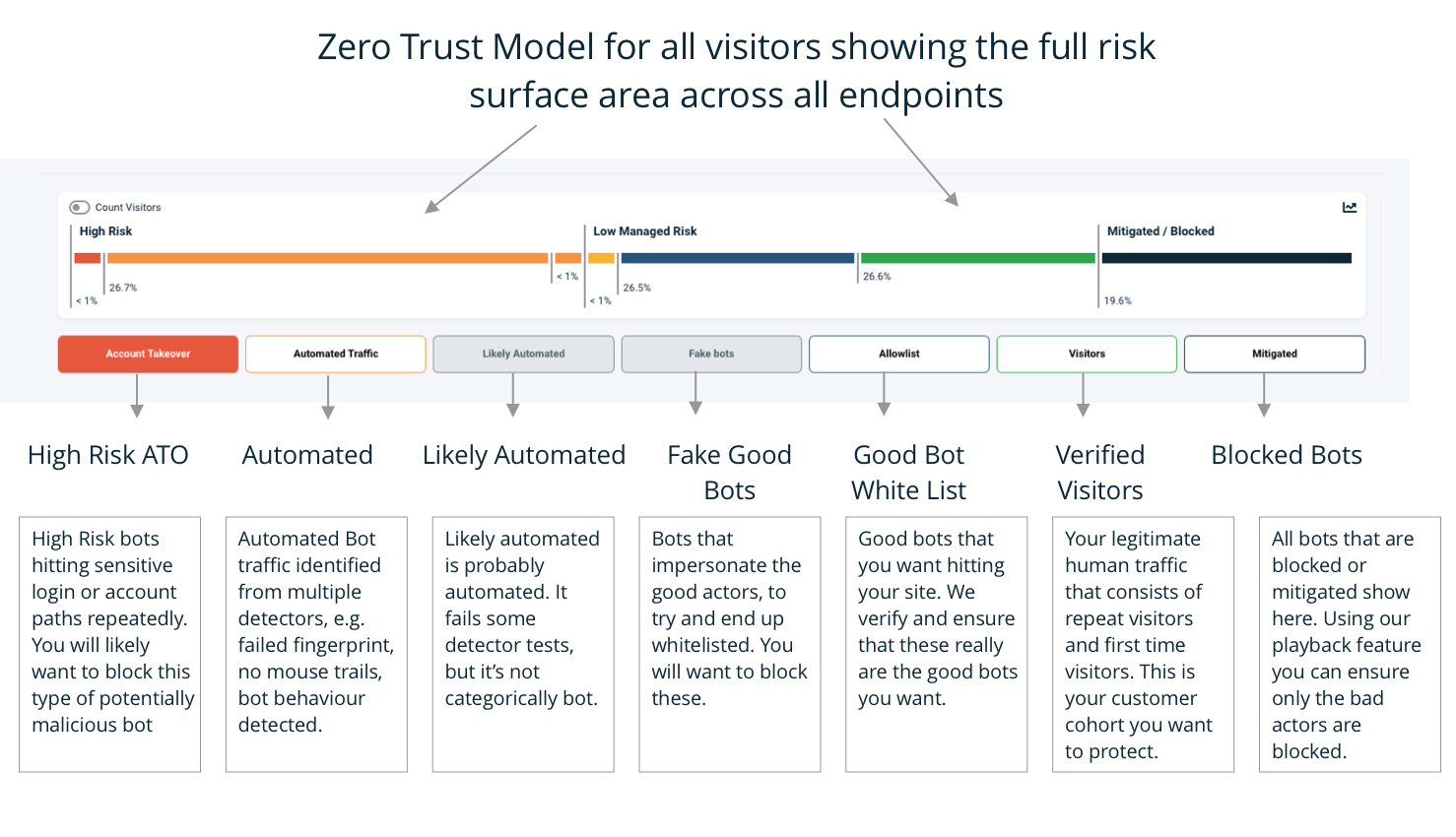

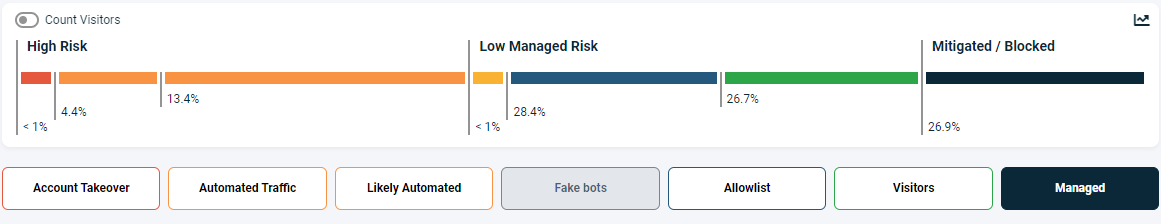

In the Command & Control page you will see the risk categories, as shown in the accompanying screenshots. This categorisation allows you to see each visitor by threat type. The risk categories are organised so that high risks are on the left, low or managed risks are in the middle, and the remaining verified visitors and managed or blocked bots, which no longer represent a risk, are on the right.

Phase 2: Managing bot services

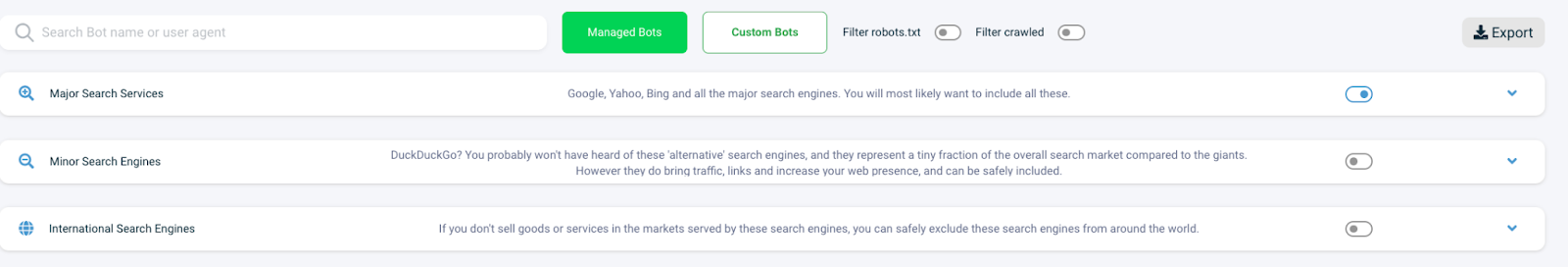

First, we want to manage the good commercial bots, such as Googlebot, that you want visiting and indexing your site. VerifiedVisitors has over 40 categories of bots, and a recommendation engine that you can use to accept the recommended bot categories. This allows you to build your good bots allowlist very quickly.

Configuring your allowlist

Click on the Allowlist category selector and it will take you to the bot service allowlist.

The top of the allowlist section includes two additional filters:

The 'Filter crawled' toggle allows you to see just the bots that have recently crawled your site. You can check these against the bot database and decide which bot categories to allow or disallow. If you prefer, expand a category to allow or disallow individual services.

The 'Filter robots.txt' toggle will show you matching services from your robots.txt file and allows you to sync this with the VerifiedVisitors Allowlist.

If you have built up a large robots.txt file over the years, this is very useful, as it allows you to set VerifiedVisitors to actively manage matching bot services from your robots.txt file. We'd recommend you still keep a robots.txt file in place for the good bots that do obey it. However, it's much more effective to manage bots using VerifiedVisitors, because we also manage visitors who masquerade as a legitimate service and who will not obey your robots.txt file.
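
To see which services a long robots.txt file actually names before syncing it, a small script can list its `User-agent` entries. This is a minimal sketch in Python and is not part of the VerifiedVisitors product: the function name `robots_txt_user_agents` and the sample file contents are made up for illustration.

```python
def robots_txt_user_agents(robots_txt: str) -> list[str]:
    """Return the distinct User-agent names in a robots.txt body, in file order."""
    agents: list[str] = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        name = value.strip()
        is_agent_line = field.strip().lower() == "user-agent"
        # Skip the "*" wildcard group: it does not name a specific service.
        if is_agent_line and name and name != "*" and name not in agents:
            agents.append(name)
    return agents


# Hypothetical robots.txt contents.
sample = """\
User-agent: Googlebot
Allow: /

User-agent: bingbot  # search
Disallow: /private/

User-agent: *
Disallow: /admin/
"""

print(robots_txt_user_agents(sample))
```

The resulting list can be compared by eye against the services shown by the 'Filter robots.txt' toggle.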

Custom bots

If you have custom bots such as uptime checkers, account integrity checkers, or other automation with a User Agent specific to you, you should add them to the access list using the Custom Bots page. This allows you to set the verification methods, for example an IP range, a user agent, or another unique element, to ensure the custom bot can be verified. Once you have created your custom bot(s), they can be added to each domain independently and allowlisted.
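
Conceptually, verifying a custom bot by IP range and user agent amounts to a check like the one below. This is an illustrative Python sketch only, not how VerifiedVisitors implements verification: the `CustomBot` class, the `is_verified` function, the `AcmeUptime/1.0` token, and the IP ranges (taken from the reserved documentation ranges) are all assumptions.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class CustomBot:
    name: str
    user_agent_token: str               # substring expected in the User-Agent header
    allowed_networks: tuple[str, ...]   # CIDR ranges the bot is known to call from


def is_verified(bot: CustomBot, user_agent: str, client_ip: str) -> bool:
    """A request is verified only if BOTH the user agent and the source IP match."""
    if bot.user_agent_token.lower() not in user_agent.lower():
        return False
    address = ip_address(client_ip)
    return any(address in ip_network(cidr) for cidr in bot.allowed_networks)


# Hypothetical uptime checker calling from a documentation-only IP range.
uptime = CustomBot("uptime-checker", "AcmeUptime/1.0", ("203.0.113.0/24",))

print(is_verified(uptime, "AcmeUptime/1.0 (+https://example.com)", "203.0.113.7"))
print(is_verified(uptime, "AcmeUptime/1.0", "198.51.100.9"))
```

Requiring both signals matters because a user agent string on its own is trivial to copy, whereas a matching source IP range is much harder to fake.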

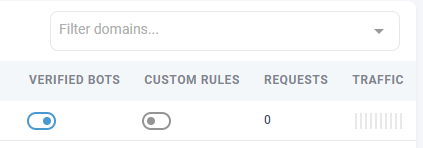

Enabling your allowlist and blocking fake bots

To enable your Allowlist, simply toggle the Verified Bots switch to on in the domains table for the domain you just configured.

Fake bots are impersonators masquerading as well-known legitimate services, hoping they will get allowed. They are by definition bad actors, and are blocked by default when the allowlist is enabled for the site.

When you enable your bot allowlist, VerifiedVisitors will verify and allow the services you have selected and block any services you did not select. This can help you further reduce unwanted visitor traffic.
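
The behaviour described above can be summarised as a small decision function. This Python sketch only restates the paragraph in code form; the names `Decision` and `allowlist_decision`, and the service names used, are hypothetical and do not correspond to any VerifiedVisitors API.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"


def allowlist_decision(claimed_service: str, verified: bool, allowlist: set[str]) -> Decision:
    """Decide what happens to a visitor that claims to be a known bot service."""
    if not verified:
        return Decision.BLOCK   # fake bot: claims a service identity but fails verification
    if claimed_service in allowlist:
        return Decision.ALLOW   # verified and selected in the allowlist
    return Decision.BLOCK       # verified, but not a service you selected


selected = {"Googlebot", "bingbot"}
print(allowlist_decision("Googlebot", True, selected))     # genuine and selected
print(allowlist_decision("Googlebot", False, selected))    # impersonator
print(allowlist_decision("ExampleBot", True, selected))    # genuine but not selected
```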

Phase 3: Vulnerable and Critical paths

In terms of our threat levels, account takeover (ATO) bots are amongst the highest risks to your business. They actively target account login paths, and they have failed our detector tests, which shows they are definitely automated.

This attack type is one that can't be tolerated. You need to actively block this traffic at the network edge, before it has a chance to do any damage.

By default, VerifiedVisitors attempts to analyse critical paths such as admin logins, payment endpoints, APIs, and other vulnerable paths, which helps detect many real and speculative attacks.

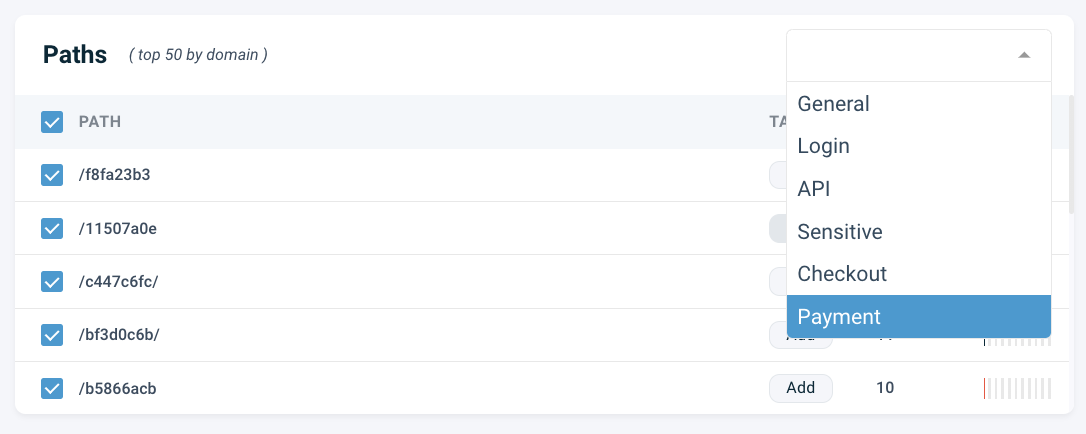

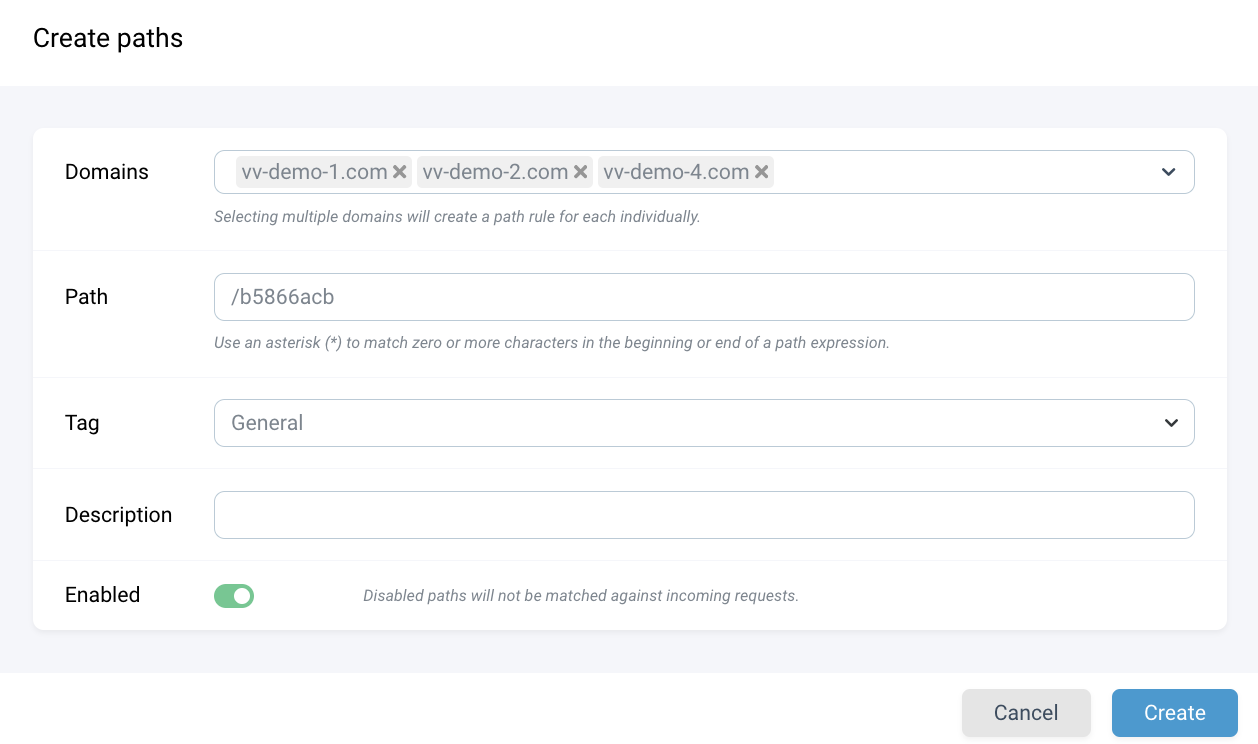

You can tag paths you know may be sensitive by directly clicking on add next to the path in the table and selecting an appropriate label for it, or via the Paths page where you can manage all your path tags. You can create as many path tags as you'd like, but it's not necessary to tag every single one.

Creating tagged paths in this way can be useful when it comes to creating targeted dynamic rules for your particularly sensitive paths.
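
As a mental model, a path tag is simply a label attached to one or more paths that a rule can later refer to. The Python sketch below illustrates the idea with glob-style patterns; the pattern syntax, the tag names, and the `tags_for` helper are assumptions for illustration and may not match how the Paths page stores tags.

```python
from fnmatch import fnmatchcase

# Hypothetical tag table: path pattern -> tag label.
PATH_TAGS = {
    "/login": "login",
    "/admin/*": "admin",
    "/api/payments/*": "payment",
}


def tags_for(path: str) -> set[str]:
    """Return every tag whose pattern matches the given request path."""
    return {tag for pattern, tag in PATH_TAGS.items() if fnmatchcase(path, pattern)}


print(tags_for("/admin/users"))
print(tags_for("/blog/post-1"))
```

An untagged path returns an empty set, which mirrors the point above that not every path needs a tag.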

Phase 4: Active Management

All visitors are managed based on behaviour. Visitors are grouped into behavioural categories (e.g. ATO or Automated) and can be managed using rules. This section explains how to configure these rules and allow VerifiedVisitors to enforce them.

Preparing a rule

Let’s take an example of an Account Takeover rule to prevent malicious traffic from accessing your login paths. Note: the following steps are applicable to any category.

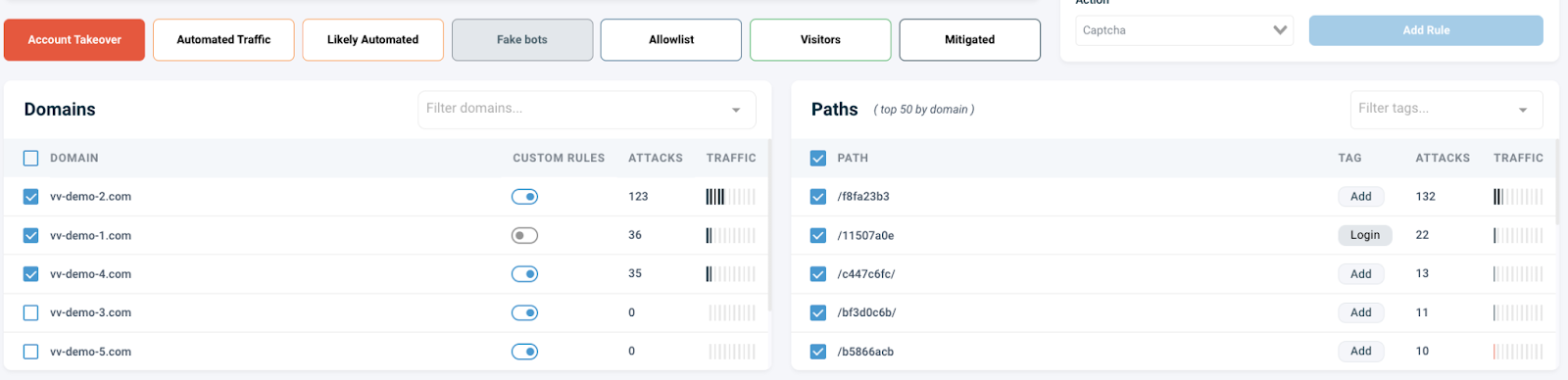

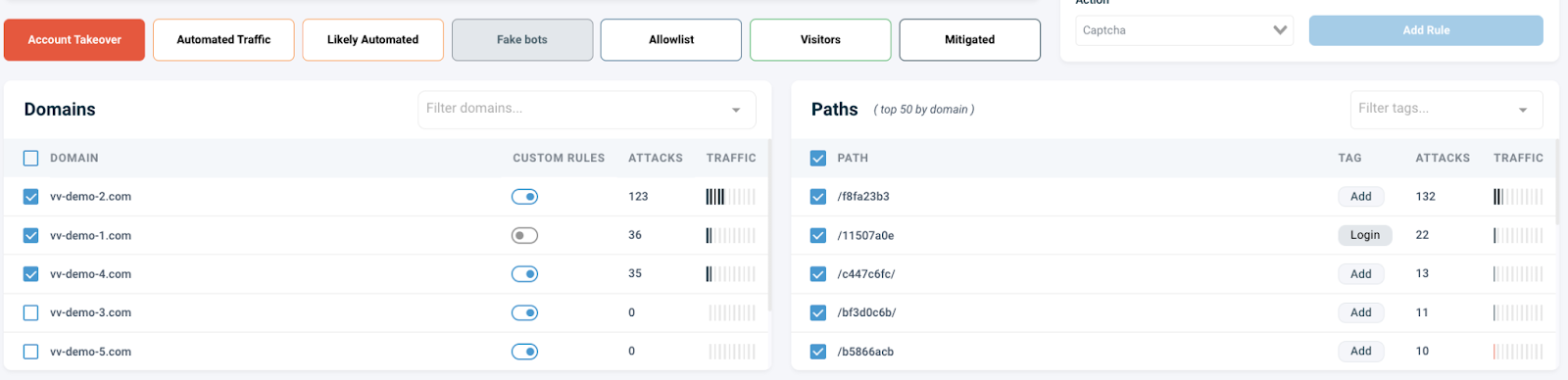

In the Command & Control page, select the red Account Takeover button (shown in the accompanying screenshots) if you have ATO traffic.

Selecting this high risk category shows you exactly where these potentially malicious bot types are attacking across the selected domains. If you have multiple domains you can see at a glance which domains have ATO bot activity, giving you the flexibility to create a dynamic rule for all your domains, either all at once or one at a time.

For each selected domain you can see the top paths that are being actively targeted by ATO bots to the right of the page. If you want to target specific paths, simply select them here, otherwise your rule will cover all paths.

For most users this is all you need to do before creating a rule. The threat type, domains, and key paths you select form the basis of your new rule, and allow you to automatically stop these malicious bots for good, no matter how they change their attacks.
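
Put another way, a rule is the combination of a threat category, a set of domains, and an optional set of paths. The Python sketch below models that combination; the `Rule` class and its field names are hypothetical and are not the rule format used by the portal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    category: str                         # e.g. "ATO" or "Automated"
    domains: frozenset[str]
    paths: frozenset[str] = frozenset()   # empty means "all paths"

    def matches(self, category: str, domain: str, path: str) -> bool:
        """True when a request in this category, on this domain and path, is covered."""
        return (
            category == self.category
            and domain in self.domains
            and (not self.paths or path in self.paths)
        )


# Hypothetical rule covering the login path on one domain.
ato_rule = Rule("Block ATO on login", "ATO", frozenset({"example.com"}), frozenset({"/login"}))

print(ato_rule.matches("ATO", "example.com", "/login"))
print(ato_rule.matches("ATO", "example.com", "/pricing"))
```

Because the rule keys on the visitor's category rather than on an IP address or user agent, a bot that rotates either of those is still covered as long as it stays in the same category.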

Reviewing the effect of your pending rule

Before creating a rule that blocks traffic, it's important to understand the effect it will have, particularly in heavily regulated business environments.

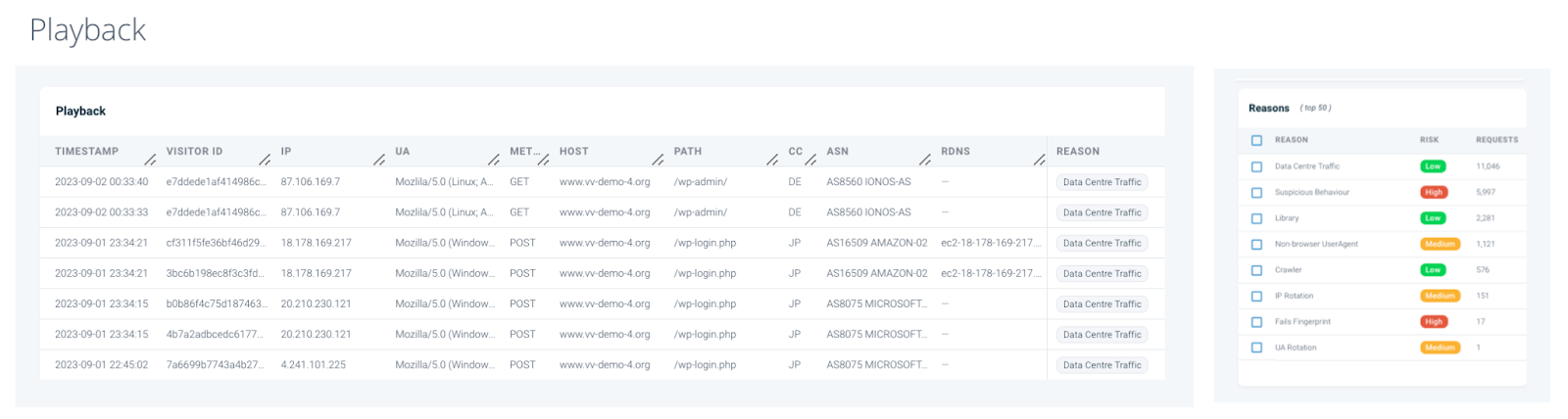

The VerifiedVisitors playback feature allows you to test out the effects of your rules before you enforce them and affect live traffic. By reviewing the visitors who would have been impacted by a rule you are able to adjust it until you're happy with the results. This data can be exported for review offline if you prefer to do this.

This view displays a detailed breakdown of each web request, along with the reason why the visitor was identified as automated bot traffic. Once you are 100% satisfied with the results, you can proceed to create the rule and choose the blocking method.
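
The idea behind playback is a dry run: evaluate the pending rule against traffic that has already been logged, and list what it would have affected, without blocking anything. The Python sketch below illustrates that idea and an export step for offline review; the record fields, the sample data, and the `playback` and `to_csv` helpers are made up and do not reflect the portal's export format.

```python
import csv
import io


def playback(logged_requests: list[dict], category: str, paths: frozenset = frozenset()) -> list[dict]:
    """Return the logged requests a pending rule would have affected.

    Nothing is blocked here: the rule is only evaluated against past traffic.
    """
    return [
        request
        for request in logged_requests
        if request["category"] == category and (not paths or request["path"] in paths)
    ]


def to_csv(rows: list[dict]) -> str:
    """Serialise matched requests to CSV text for offline review."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["ip", "path", "category", "reason"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up sample records; real weblog fields will differ.
logged = [
    {"ip": "198.51.100.4", "path": "/login", "category": "ATO", "reason": "failed automation test"},
    {"ip": "203.0.113.9", "path": "/pricing", "category": "Automated", "reason": "headless browser"},
    {"ip": "198.51.100.8", "path": "/admin", "category": "ATO", "reason": "failed automation test"},
]

affected = playback(logged, "ATO", paths=frozenset({"/login"}))
print(len(affected), "request(s) would have been mitigated")
print(to_csv(affected))
```

Widening or narrowing the `paths` argument and re-running the dry run is the code equivalent of adjusting a rule until you are happy with the results.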

Naming and enforcing your rule

Now that we've identified potentially malicious bots crawling on sensitive paths, it's time to create dynamic rules to stop the unwanted bots for good.

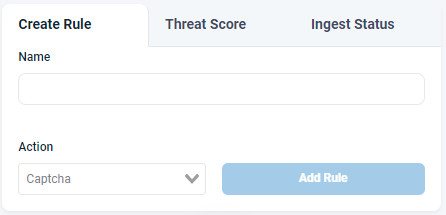

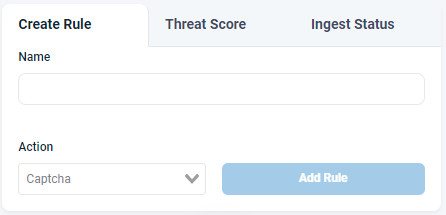

In the Create Rule tab on the top-right corner of the page, simply give the rule a name, select the preferred mitigation from the list, then click Add Rule to create it.

VerifiedVisitors offers two main methods of blocking at the network edge: either blocking outright or serving CAPTCHA.

Serving CAPTCHA is often a good choice as it allows us to further monitor bots as they attempt to solve the CAPTCHA, which most cannot do. It also allows a real human the opportunity to verify themselves if they have been incorrectly identified as a bot.

Reviewing and modifying existing rules

Your created rules can be found in the Custom Rules page, where you can edit, enable/disable, and delete existing rules.

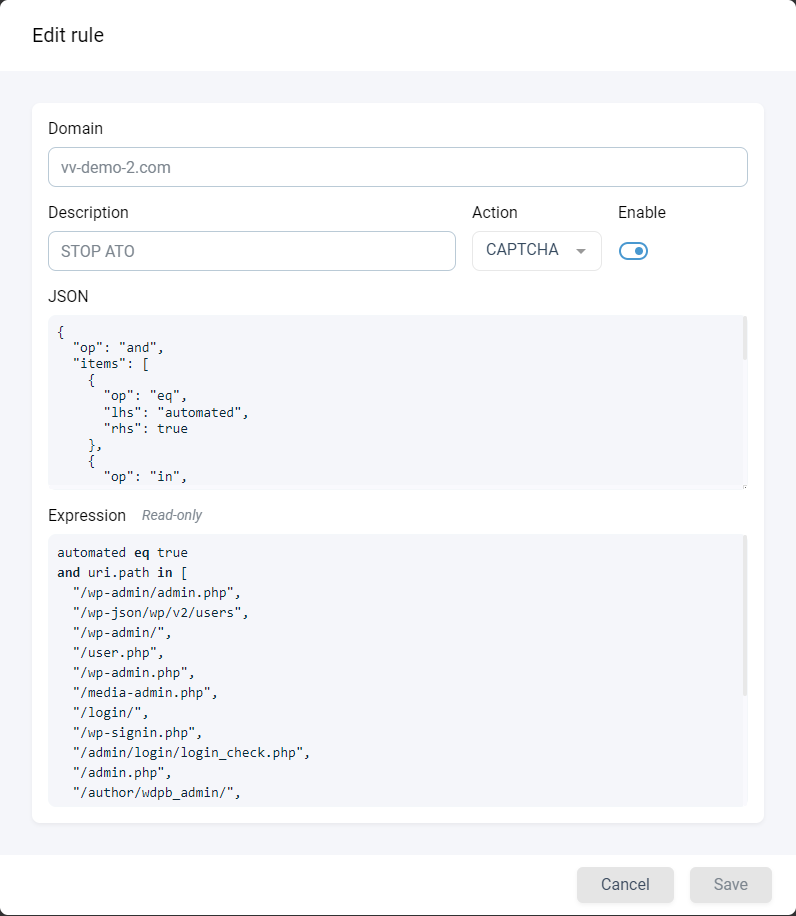

Clicking on the Edit Icon for a rule will present a dialog where you can configure the rule.

In this example, we've created a rule to serve CAPTCHA to automated visitors on a select set of paths.

Here, you can update the rule name, use the drop-down menu to choose the action/mitigation type, and enable or disable the rule. You can also enable or disable individual rules from the table view if you want to isolate or test individual or combinations of rules.

For more advanced users, the dialog also shows a preview of the rule expression so you can understand exactly what is being done, as well as the source JSON, which can be edited for full customisability.

Automated traffic / likely automated categories

In the above example we created a rule targeting ATO. The same process can be used to handle traffic in the Automated and Likely Automated categories (depending on your site these may be more likely to be detected from Day 1).

Automated visitors have failed our core tests and have proven themselves to be bots, and can represent a real threat. Blocking is a good option for these, as we know this traffic is definitely automated. VerifiedVisitors' rules can dynamically target visitor categories, not just specific visitors, so once you configure a rule to block automated traffic for particular areas of your site or service, it's blocked, no matter how the bot tries to rotate through user agents or IPs, or disguise its origins.

The Likely Automated category includes visitors that exhibit suspicious behaviour and are likely to be bot driven, although the evidence is not definitive. They may have failed some of our tests but passed others. For example, the visitor may originate from a data center but show no other signs of automation.
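
The difference between the two categories can be pictured as follows. This Python sketch is a deliberate simplification and an assumption, not the VerifiedVisitors detection logic: it treats a visitor that fails every test as Automated and one that fails only some as Likely Automated, and the test names are invented.

```python
def categorise(test_results: dict[str, bool]) -> str:
    """Map detector outcomes (True = passed) to a behavioural category.

    Simplifying assumption: failing every test means Automated, while
    failing only some of them means Likely Automated.
    """
    failed = [name for name, passed in test_results.items() if not passed]
    if not failed:
        return "No automation detected"
    if len(failed) == len(test_results):
        return "Automated"
    return "Likely Automated"


# Invented test names, for illustration only.
print(categorise({"browser_check": False, "behaviour_check": False, "origin_check": False}))
print(categorise({"browser_check": True, "behaviour_check": True, "origin_check": False}))
```

The second call corresponds to the data center example above: one signal is suspicious, but the others show no sign of automation.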

Phase 5: Reporting

At this stage, your traffic should be under control. You've set up your allowlist, blocked any fake bots, and put in place rules to block unwanted bot traffic from key paths. Now, the identified risks should constitute a smaller percentage of your traffic, and all the legitimate verified visitors and bots are managed. You can review which visitors have been managed by clicking on the Managed category.

What's left should be pristine human traffic that creates value for your business and gives you verified user metrics you can trust to support customer UX improvements and A/B testing of new services.

Phase 6: Alerts and Thresholds

Once the dynamic rules are in place, and VerifiedVisitors is managing your bot traffic, it's time to look out for any potential anomalies in traffic patterns that could be indicative of further bot traffic.

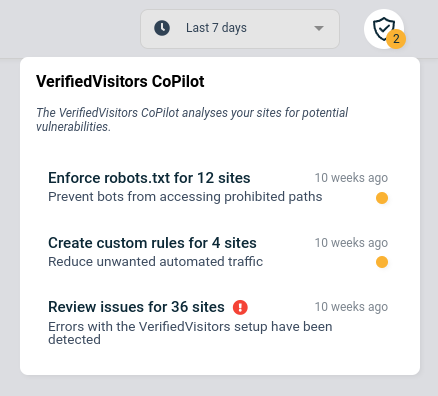

The VerifiedVisitors CoPilot gives you alerts, tips, and suggestions for improving the overall security and performance of your websites. Click on suggestions to drill down and find an explanation of any current issues and their solutions. Alert notifications can also be set up via webhooks and Slack.

What to do if you're under attack

If you have just subscribed to VerifiedVisitors because you are currently experiencing an attack and need to mitigate it urgently, follow these simplified steps to set up mitigations for the highest-risk visitors detected.

Let's take an example of an Account Takeover rule to prevent this malicious traffic. In the Command & Control page, select the red Account Takeover button (if you have ATO traffic).

Then, select the domain(s) and paths you wish to protect, and create the rule via the Create Rule tab in the top-right corner of the page. Give the rule a name, select a mitigation, then click Add Rule. Finally, enable the Custom Rules toggle for the domain(s) in the domains table, and you’re done.

You can repeat this process for the Automated and Likely Automated categories if necessary.

In time-sensitive situations this is all you need to do. You can come back later to review what was mitigated and to adjust your rules and mitigations when you are not under pressure.

When you have more time, return to Phase 1 for a more in-depth guide to fully optimising your VerifiedVisitors implementation.